Should you invest in the development of conversational interfaces?

The conversational user interface is changing the way we interact with machines. Ideally, it offers a natural and intuitive interaction between human and machine. Chatbots, voicebots, intelligent assistants, and other voice-enabled devices open up a whole new world; they speed up and improve daily tasks. They also increase efficiency and, for the businesses that employ them, they are cost-effective compared to human staff.

So, as a business, should you start to develop and deploy these conversational solutions?

As a UX designer, my instinctive response would be: “Yes, of course!” It is obvious that conversational UI is part of our future, and designing that future is exciting. But how fair is that towards my clients? Even though everyone these days claims to be innovative, we all know that true innovation comes at a cost. Part of our responsibility as designers is to make our clients aware of the cost of innovative design, as well as the reward.

In this article we will dive into the pros and cons of such an endeavor.

- Where are we when it comes to chatbots and intelligent assistants? What is the current status?

- What are the most common mistakes we should be aware of?

- What is the best approach, if any?

As usual, there is no one-size-fits-all answer. After all, that’s what makes UX design such an interesting field… almost every question can be answered with those two words no manager wants to hear… “it depends”.

A quick overview of interface history

Conversational UI is the latest stage in a long history of human-machine interaction.

In essence, every interaction will have some input and some output. You press the accelerator in your car (input) and the car will speed up (output). The same input on the brake pedal (press) will result in a different output (the car should slow down). If we want the interaction to be predictable and easy to understand we should be consistent in our designs; you will be hard pressed to find a car where the accelerator is not the right-most pedal.

For more complicated and more varied interactions between human and machine, different interfaces have been the go-to tools over the years.

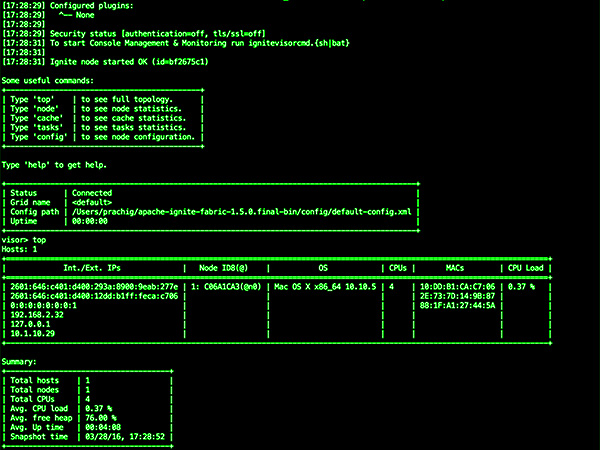

A textual interface, or command line interface, was the only way to interact with computers for years. A keyboard was used to type in the commands and a screen provided the feedback, showing both the commands typed and the response from the machine.

In the early years, commands needed to adhere to strict rules (to prevent the dreaded ‘syntax error’ or ‘unknown command’). Later developments allowed freer, more natural language to be used. Today, most search engines will take a stab at interpreting a search query provided by the user.

The next evolution came with the Graphical User Interface. This interface tries to mimic the way we perform mechanical tasks in real life situations and replaces the textual interface.

To use this interface and enable or disable a feature or capability in the software program, we “click a button” on a screen using a pointing device such as a mouse. We don’t actually click that button, but this action mimics the mechanical action of switching a real device on and off. Both Apple and Microsoft made this type of interface and this way of interacting with computers extremely popular.

The next step came with the introduction of touch screen devices, which eliminated the use of pointing devices, making the whole experience more direct, more natural, and more intuitive.

Conversational interfaces

The latest evolution of human-machine interaction is the Conversational User Interface.

First, let’s define what precisely a conversation is within this context (as opposed to the standard inter-human conversation):

- It does not have to be oral; it can be in writing, such as with chatbots.

- It is limited to two participants, of which (at least) one is a non-human entity.

- It is less interactive than human conversation and it is more about exchanging information than exchanging ideas.

- It is thought of as non-social, as computers are involved.

- It is a means of communication that enables natural conversation between two entities.

- It is about learning and teaching, as computers continue to learn and develop their understanding capabilities.

Based on the above, we see that a conversational interaction is a (relatively) new form of communication between humans and machines, that consists of a series of questions and answers, if not an actual exchange of thoughts.

Hence, in the conversational interface we see, once again, a form of bilateral communication, where the user asks a question and the computer provides an answer. Actually, there is not much difference from the original command line interface, other than the shape and form in which the interface is presented. The interaction is with “someone” who gives the answers; this someone is a humanized computer entity, or in short, a bot.

The Conversational UI mimics a text/voice interaction with a friend, or in a business context, with a service provider. It delivers a free and natural experience that gets the closest to human-human interaction that we have seen yet.

A special place in the conversational UI is reserved for the Voice-Enabled Conversational UI.

While natural evolution led us from textual, through graphical, to conversational user interfaces, the shift to conversational voice interaction between human and machine should be called a true revolution. Speech is our primary and most elementary means of communication. When we can use it freely while interacting with machines, and machines can freely interact with us, our human-machine relationships will at once enter areas worthy of Star Trek quotes: truly where no man has gone before.

Computers are already capable of recognizing our voice, “understanding” our requests, and giving sensible answers that can even include suggestions, recommendations, and to some extent solid advice.

The building blocks of a good conversational UI

There are several building blocks that make up a good conversational application:

- Speech recognition (for voicebots).

- Natural Language Understanding.

- Conversational level.

- Dictionary / text samples.

- Contextual layers.

- Business logic.

In this section we will look into each of these building blocks a bit deeper, so you get an idea of what is needed to develop a good conversational solution.

Voice recognition

The voice recognition layer, or speech recognition, or speech-to-text layer, transcribes voice to text.

Your device or machine captures voice with a microphone and provides a text transcription of the words. With a simple level of text processing, we can develop a voice control feature, using simple commands such as “turn right”, or “call Peter”.
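To make the "simple level of text processing" concrete, here is a minimal sketch in Python. It assumes the speech recognition layer has already produced a transcript; the command patterns and the `parse_command` function are hypothetical names chosen for illustration, not part of any particular speech product.

```python
import re

# Hypothetical command grammar: each pattern maps a transcript to an action name.
COMMAND_PATTERNS = [
    (re.compile(r"^turn (left|right)$"), "turn"),
    (re.compile(r"^call (\w+)$"), "call"),
]


def parse_command(transcript):
    """Return (action, argument) for a recognised command, otherwise None."""
    # Normalise case and drop trailing punctuation added by the transcriber.
    text = transcript.strip().lower().rstrip(".!?")
    for pattern, action in COMMAND_PATTERNS:
        match = pattern.match(text)
        if match:
            return action, match.group(1)
    return None


print(parse_command("Turn right"))                 # ('turn', 'right')
print(parse_command("Call Peter"))                 # ('call', 'peter')
print(parse_command("Please phone Peter for me"))  # None
```

The last call shows the limitation: anything outside the fixed grammar is simply not recognised.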

Natural Language Understanding (NLU)

Voice recognition alone does not provide much understanding on the machine side of things. To achieve any level of understanding, we must include a layer of NLU that fulfills the task of reading comprehension.

The computer reads the text (with a voicebot, this will be the transcribed text from the speech recognition) and then tries to understand the “intent” of the message and translate that into actionable steps.

For example, I can command my car’s navigation, giving it an instruction like: “Go to <city>, <street>, <number>”. However, if I tell it to: “take me to <street> <number> in <city> through a nice scenic route”, I will get nothing but a snappy “excuse me?” in reply. In this case I will need to talk like a computer to get things done.

Since humans don’t talk like computers, and our goal is to create a humanized experience (not a computerized one), we need this NLU layer to connect various requests into the same intent.
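As a rough illustration of connecting various requests to the same intent, the sketch below maps several phrasings onto one intent name. It is a toy phrase matcher, not real NLU: the intent names, the phrase lists, and the example address are all assumptions made for this example, and a production system would typically use a trained language model instead.

```python
import re

# Hypothetical intents, each with several phrasings that should trigger it.
INTENT_PHRASES = {
    "navigate": ["go to", "take me to", "drive me to"],
    "call_contact": ["call", "phone", "ring"],
}


def detect_intent(utterance):
    """Return the first intent with a phrase that occurs in the utterance."""
    text = utterance.lower()
    for intent, phrases in INTENT_PHRASES.items():
        for phrase in phrases:
            # Word boundaries prevent "call" from matching inside "recall".
            if re.search(r"\b" + re.escape(phrase) + r"\b", text):
                return intent
    return None


print(detect_intent("Go to Main Street 12"))
print(detect_intent("Take me to Main Street 12 through a nice scenic route"))
print(detect_intent("What is the weather like?"))
```

The first two requests resolve to the same `navigate` intent even though they are worded differently; the third matches nothing and returns `None`.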

Things become even more interesting when the conversation becomes more free-flow. When talking to an intelligent assistant, we expect the phrase “I want to fly to Montréal”, to be understood as “I want to book a ticket for a flight to Montréal”. Or even more obscure: “I need to travel again”, where we not only present the bot with no word that can identify air travel, nor a destination that can be looked up, but we also expect our assistant to grasp that previous travels should be used as a reference.

At the moment, computer science considers NLU to be an “AI-hard” problem, meaning that even with AI (powered by deep learning) developers are still struggling to provide a high-quality solution. An AI-hard problem cannot be solved by a specific algorithm or by sheer computing power alone; solving it means dealing with unexpected circumstances in real-world situations. In NLU, these are the countless configurations of words, sentences, languages, and dialects.

Bottom line: as long as this AI-hard problem exists, there will be no intelligent chat- or voicebot at the other side of the conversation. This is something companies need to fully realize before trusting their communications to machine entities and moving these communications away from human beings.

Dictionaries & text samples

To build a good NLU layer, one that can understand people, we must provide an extensive and comprehensive set of samples, concepts, and categories in our subject domain.

We need to provide the bot with a dataset it can use to learn from. Depending on the target domain, this can be a daunting task.

Unlike with a GUI, where our user is presented with a limited set of possibilities determined by the development team, a conversational UI puts far more power in the hands of the user and leaves the interpretation to the machine. Needless to say, if we want to spare our user a frustrating experience with an entity that does not understand a request that is crystal clear to the user, we need to provide many, many samples so the bot’s NLU can map different requests to the same intent.

But be aware: the more intents we try to cover, the more samples we need to provide, and the greater the risk of intents overlapping.

“I need help!” Does this mean a request for help on how to use the entity, or should the entity contact support?
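One way to handle such overlap is to score every intent and ask a clarifying question when the top two scores are too close. The sketch below is only an illustration under simple assumptions: the sample utterances, the word-overlap (Jaccard) score, and the `margin` threshold are all hypothetical choices, not a recommended NLU design.

```python
# Hypothetical sample utterances per intent; both intents mention "help".
SAMPLES = {
    "usage_help": [
        "help me use this bot",
        "how does this work",
        "i need help with the bot",
    ],
    "contact_support": [
        "i need help from support",
        "contact support",
        "talk to a person",
    ],
}


def overlap(utterance, sample):
    """Jaccard similarity between the word sets of two sentences."""
    a = set(utterance.lower().split())
    b = set(sample.lower().split())
    return len(a & b) / len(a | b)


def resolve(utterance, margin=0.2):
    """Return an intent name, 'ambiguous', or 'unknown'."""
    cleaned = utterance.strip("!?. ")
    # Best sample score per intent, highest first.
    ranked = sorted(
        ((max(overlap(cleaned, s) for s in samples), intent)
         for intent, samples in SAMPLES.items()),
        reverse=True,
    )
    (best_score, best_intent), (second_score, _) = ranked[0], ranked[1]
    if best_score == 0:
        return "unknown"
    if best_score - second_score < margin:
        return "ambiguous"  # the bot should ask a follow-up question
    return best_intent


print(resolve("I need help!"))
print(resolve("Contact support please"))
```

With these samples, "I need help!" scores almost equally for both intents and is reported as `ambiguous`, while "Contact support please" resolves cleanly to `contact_support`.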

Another issue is the way we collect these samples. How do we get realistic data sets that are useful in both quantity and quality? Are we going to collect these from our users directly? That might run into privacy issues, as some large tech companies, who shall remain nameless, have recently found out. A tricky issue indeed, and one that needs to be carefully considered, as it has a major influence on the final quality of our conversational UI product.

Context

Contextual conversation is among the toughest nuts to crack in conversational interaction. Being able to understand context is what makes a bot humanized.

At its bare minimum, conversational UI is a series of questions and answers, and as such a modern variation of the FAQ.

Adding a contextual layer to it is what sets the experience apart. By having contextual awareness, a bot can keep track of the different stages of the conversation and relate between different pieces of input. For this it needs to consider the conversation as a whole, and not just the latest piece of input it was given.

A contextual conversation is different from an ordinary Q&A. At every state there are multiple ways the user can respond, and the UI has to deal with all of them and keep them in perspective with regard to the bigger picture of the conversation.

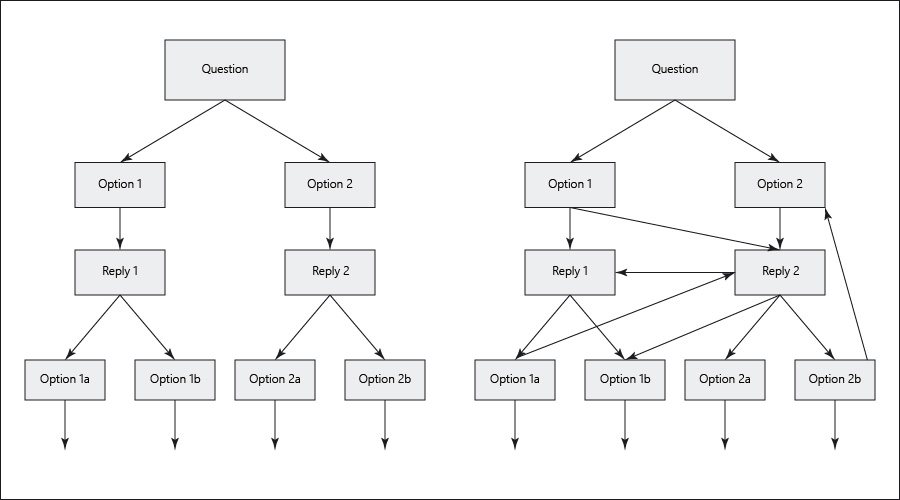

One way to deal with multiple flows is to use a State Machine Methodology.

A state machine gives us the liberty to break down a large, complex task into a chain of independent smaller tasks, as in the image above. The smaller tasks are linked to each other by events. Movement from one state to another is called a transition, and during a transition we generally perform some action. The general formula for a state machine is: Current State + Some Action or Event = Next State.
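The formula can be sketched as a lookup table keyed by (current state, event). The states and events below are hypothetical names for a tiny flight-booking dialogue, chosen only to illustrate the idea.

```python
# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    ("greeting", "user_wants_flight"): "ask_destination",
    ("ask_destination", "destination_given"): "ask_date",
    ("ask_date", "date_given"): "confirm",
    ("confirm", "user_confirms"): "done",
    ("confirm", "user_declines"): "ask_destination",
}


def next_state(current, event):
    """Current State + Event = Next State; unknown events leave the state unchanged."""
    return TRANSITIONS.get((current, event), current)


state = "greeting"
for event in ["user_wants_flight", "destination_given", "date_given", "user_confirms"]:
    state = next_state(state, event)
print(state)  # done
```

Note that every permitted flow has to be listed in the table in advance; an event the designer did not anticipate simply leaves the conversation where it was.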

In its simplest form a State Machine is similar to an index tree, as shown on the left in the above image.

However, most cases are not that simple, and we need to map every possible flow in advance. This leads to hard-to-understand schemes that are almost impossible to maintain over time. Another problem with state machines is that, even for simple use cases, supporting multiple variations of the same response means duplicating much of the work we already did.

The Event-Driven Contextual Approach is far more suitable for modern-day conversational UI. It lets users express themselves in an unlimited flow and doesn’t force a particular flow onto them. Accepting that it is impossible to map the entire conversation in advance, we focus on the context of the input to gather all the needed information.

Using this methodology, the user leads the conversation and the bot analyses the data and completes the flow in the background. Now we can really separate ourselves from the traditional UI and provide human-level interaction.

The machine is given a set of parameters it needs to acquire before it can complete a request. For instance, to book a flight, the machine needs:

- Departure location.

- Destination.

- Date.

- Airline.

Starting from the first interaction the bot will look for these pieces of data. Regardless of which flow (order of providing the data) the user chooses, the bot will continue to ask for the missing pieces until it has everything it needs to perform a search for the best possible flight.

By building a conversational flow in an event-driven contextual approach, we mimic a normal interaction with a booking agent.
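A minimal sketch of this parameter-gathering idea, often called slot filling, could look as follows. It assumes that an NLU layer has already extracted values from each user message into a dictionary; the `BookingContext` class, the prompt texts, and the example values are hypothetical.

```python
# The four parameters the bot needs before it can search for a flight.
REQUIRED_SLOTS = ["departure", "destination", "date", "airline"]

# Hypothetical follow-up questions, one per missing parameter.
PROMPTS = {
    "departure": "Where are you flying from?",
    "destination": "Where would you like to go?",
    "date": "When do you want to travel?",
    "airline": "Do you have a preferred airline?",
}


class BookingContext:
    """Keeps track of what the user has told us so far, in any order."""

    def __init__(self):
        self.slots = {}

    def update(self, extracted):
        """Merge the values extracted from the latest user input."""
        for name, value in extracted.items():
            if name in REQUIRED_SLOTS and value:
                self.slots[name] = value

    def missing(self):
        return [name for name in REQUIRED_SLOTS if name not in self.slots]

    def next_prompt(self):
        """Return the next question to ask, or None when everything is known."""
        missing = self.missing()
        return PROMPTS[missing[0]] if missing else None


ctx = BookingContext()
ctx.update({"destination": "Montréal"})                       # user starts with the destination
print(ctx.next_prompt())                                      # Where are you flying from?
ctx.update({"departure": "Amsterdam", "date": "next Friday"})  # two answers at once
print(ctx.next_prompt())                                      # Do you have a preferred airline?
```

The order in which the user supplies the information does not matter; the bot only asks for what is still missing.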

Business logic

By now, I hope, we can all agree that building a conversational UI is not an easy thing to do.

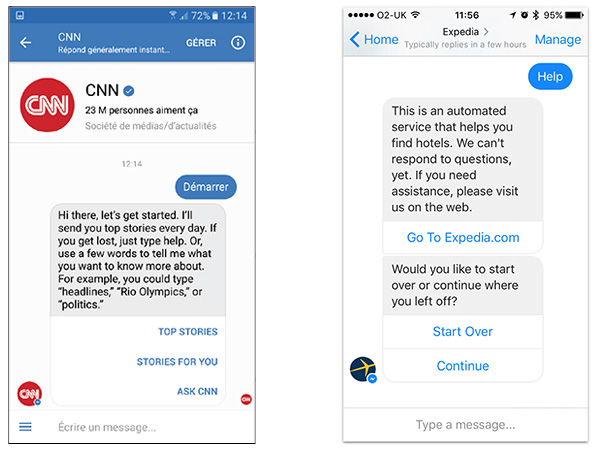

The vast majority of bots in service today don’t use NLU at all and avoid free speech interaction. Most of them, both chat- and voicebots, are nothing more than glorified Q&A flows. Often companies have great expectations when they hear about chatbots, and almost always it leads to great disappointment once the results are in.

Most of these bots handle a few specific business cases, such as the FAQ on terms and policies, opening hours, and such. Any other question gets an “I am sorry, but I don’t understand the question” response.

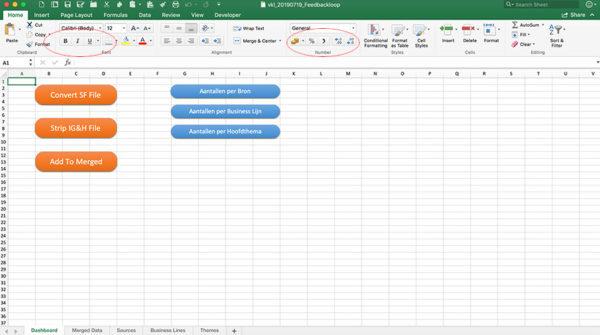

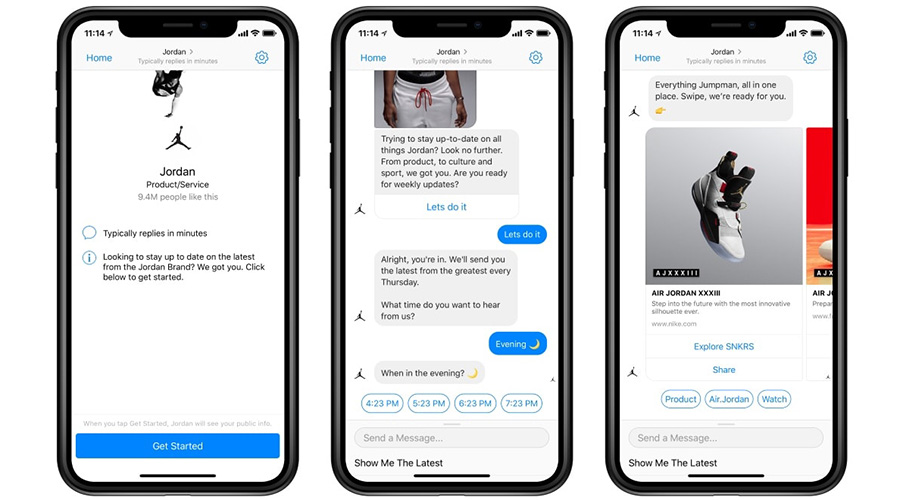

Some popular chat interfaces lean heavily on the GUI, eliminating free speech and offering a menu selection instead, to guide the user along well-known waters.

However, for a true conversational experience between our bot and our users, we must add a dynamic business logic layer.

So, after having enabled speech recognition, developed a solid NLU layer, collected and organized an extensive sample database, and worked on an event-driven contextual flow, we need to connect the bot to dynamic data. To gain access to the real-time data it needs to actually perform transactions, our bot must connect to the data available in our systems. This can be done through APIs to back-end systems.

Earlier we looked at the required information our bot needed to search for a flight.

If we continue this model, the bot needs to retrieve real-time data on when the next flight from the departure location to the destination leaves, which seats are still available for booking, and at what price. After booking, the bot could also handle payment and other functionality through APIs, providing a full digital service.
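The sketch below illustrates where such a business logic layer sits. It uses an in-memory stand-in instead of a real back-end API; the `Flight` fields, the `InMemoryFlightApi` class, the flight numbers, and the prices are all invented for illustration, and a real bot would call the company's own services here.

```python
from dataclasses import dataclass


@dataclass
class Flight:
    number: str
    departure: str
    destination: str
    date: str
    seats_left: int
    price: float


class InMemoryFlightApi:
    """Stand-in for a real back-end API (hypothetical interface)."""

    def __init__(self, flights):
        self._flights = flights

    def search(self, departure, destination, date):
        """Return flights on the requested route and date that still have seats."""
        return [
            f for f in self._flights
            if (f.departure, f.destination, f.date) == (departure, destination, date)
            and f.seats_left > 0
        ]


def best_offer(api, departure, destination, date):
    """Return the cheapest bookable flight, or None if nothing is available."""
    offers = api.search(departure, destination, date)
    return min(offers, key=lambda f: f.price, default=None)


api = InMemoryFlightApi([
    Flight("XY1", "AMS", "YUL", "2030-01-01", seats_left=0, price=100.0),
    Flight("XY2", "AMS", "YUL", "2030-01-01", seats_left=3, price=450.0),
    Flight("XY3", "AMS", "YUL", "2030-01-01", seats_left=5, price=520.0),
])
offer = best_offer(api, "AMS", "YUL", "2030-01-01")
print(offer.number, offer.price)  # XY2 450.0
```

The cheapest flight is skipped because it is sold out, which is exactly the kind of real-time detail a static Q&A bot cannot know.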

Challenges with developing a conversational UI

New technology always comes with new challenges. We are still a long way off reaching C-3PO.

- NLU is an AI-hard or AI-complete problem.

Without full AI, we most likely won’t be able to solve this. One way around it is to limit the bot’s knowledge to a specific use case. Our flight booking bot should not give medical advice, and a med-bot should not try to book you a flight. Until we solve AI, we won’t reach the one-assistant-serves-all scenario.

- Accuracy level of our bot.

This is a logical consequence of the NLU issue. We need to cover all possible requests, in every form and language, within the covered use case, for the user experience to get even close to satisfying. Statistics show that more than 70% of interactions with the Facebook Messenger chatbot fail. Considering the resources they pour into this thing, can you promise your client they will come even close with their budget?

- From GUI to CUI or even VUI.

A conversational user interface presents many new challenges that are not present in the old graphical user interface, and the voice user interface adds further layers of problems. The unlimited options for valid input, the interpretation of that input, the AI-hard issues described above, and much more remain roadblocks that prevent us from offering our users a true screenless experience.

- Currently available chatbots.

Most do not get much beyond a menu-driven interaction: they give some options to choose from and limit the flow to a narrow, specific topic. One should seriously wonder whether the investment will return enough value.

- Currently available voicebots.

Quite similarly, voicebots these days are accompanied by extensive help systems, so the user can follow the strict rules needed to operate them. See the example I gave earlier in this article about my car’s navigation. Still, we consider the hands-free experience in the car useful, as operating navigation or phone can be done with as little distraction from driving as possible.

- Non-implicit contextual conversation.

We expect the bot to replace a human, not another machine, which is what is happening right now. Conversational UI should mimic the interactions we would have with another person, regardless of text or voice communications. Even when we limit the interaction to a specific use case and include all possible samples, there is one thing that remains difficult to predict: implicit requests.

If you talk to a person and tell them your son’s birthday is coming up in six weeks, they will draw conclusions about your travel plans from that information that a machine will never draw. Your user will always have to provide all information to your bot explicitly, which severely limits the real-life experience.

- Security and privacy.

Quite controversial these days due to big data scandals all over the place, the collecting and analyzing of huge sample numbers to increase the capabilities of our bots is seriously frowned upon by governing bodies and the general public. The fact that some of the leading corporations in this field claim that the collected data becomes their property doesn’t help either.

Think of devices listening in on private conversations, even when they are turned off, of monitoring carried out by temporary employees, and so on. This is all important for us to understand and consider for our clients before entering this battlefield.

Final words

Of course, as designers, we want to be at the front when it comes to innovative projects. But part of our responsibility towards the companies that hire us is to give good advice.

It is a safe assumption that conversational UI, in time, will replace all current ways we interact with computers and other devices. Whether you turn the lights on or off in the living room with a voice command, or shop online with the assistance of a bot, conversational UI makes these interactions more focused and efficient.

Yet the current state of affairs is that real-life conversational UI still lacks many of the components needed, and we are facing many unsolved challenges and question marks. The experience is very limited for the user, as most of it is still non-contextual, and bots are a far cry from understanding feelings or social interactions.

Developing a decent bot experience can be great for a company, but we need to be aware of the over-enthusiastic replacement ideas that some decision makers have. Let me finish by giving a good example.

Domino’s Pizza has both an app and a chatbot with which customers can order pizza. On average it takes 17 touches to order a pizza with the app. It takes, once again on average, 75 touches to order the same pizza via the bot. Domino’s does the right thing by keeping all channels open for ordering pizza, even the old-fashioned phone. The bot is used by people who like to try it and don’t mind the extra effort it takes to use it. Nobody is forcing them to use it, thereby preventing negative feedback about the bad experience it essentially offers with regards to efficiency.

This is the right way of doing things. If your client starts the first meeting with a statement such as: “we are going to build a bot, because we want to reduce <fill in any other communication channel>!”, be very wary. This is a sure set-up for failure, as the bot most likely will not be able to deliver that goal.

Having said that, even when your design needs to include loads of GUI elements, working on conversational interfaces can be an exciting opportunity. Just make sure the right conditions and expectations are in place.

Until next time…